🔐 Brave New Trusted Boot World 🚀

This document looks at the boot process of general purpose Linux distributions. It covers the status quo and how we envision Linux boot to work in the future, with a focus on robustness and simplicity.

This document assumes that the reader is thoroughly familiar with TPM 2.0 security chips and their capabilities (e.g., PCRs, measurements, SRK), boot loaders, the shim binary, Linux, initrds, UEFI firmware, PE binaries, and SecureBoot.

Problem Description

Status quo ante of the boot logic on typical Linux distributions:

- Most popular Linux distributions generate initrds locally, and these are unsigned, thus protected neither by SecureBoot (since that would require local SecureBoot key enrollment, which is generally not done) nor by TPM PCRs.
- The boot chain is typically Firmware → shim → grub → Linux kernel → initrd (dracut or similar) → root file system.
- The firmware’s UEFI SecureBoot protects shim, and shim’s key management protects grub and the kernel. No code signing protects the initrd. The initrd acquires the key for the encrypted root fs from the user (or via TPM/FIDO2/PKCS#11).
- Shim, grub and the kernel are measured into TPM PCR 4, among other things.
- The EFI TPM event log records the data measured into the TPM PCRs, and can be used to reconstruct and validate the state of the TPM PCRs from the resources used.
- No userspace components are typically measured, except for what IMA measures.
- New kernels require locally generating new boot loader scripts and a new initrd each time. OS updates thus mean fragile generation of multiple resources and copying multiple files into the boot partition.

Problems with the status quo ante:

- The initrd typically unlocks root file system encryption, but is not protected whatsoever, and is trivial to attack and modify offline.
- OS updates are brittle: PCR values of grub are very hard to pre-calculate, as grub measures the chosen control flow path, not just code images. PCR values vary wildly, and OS-provided resources are not measured into separate PCRs. Grub’s PCR measurements might be useful up to a point to reason about the boot after the fact, for the most basic remote attestation purposes, but they are useless for calculating expected values ahead of time during the OS build process. That would be desirable in order to bind secrets to future expected PCR state, for example to bind secrets to an OS in a way that they remain accessible even after that OS is updated.
- Updates of a boot loader are not robust: they require multi-file updates of the ESP and boot partition, and regeneration of boot scripts.
- There is no rollback protection (no way to cryptographically invalidate access to TPM-bound secrets on OS updates).
- Remote attestation of running software is needlessly complex, since initrds are generated locally and are thus practically guaranteed to vary on each system.
- Locking resources maintained by arbitrary user apps to TPM state (PCRs) is not realistic for general purpose systems, since PCRs change on every OS update, and there is no mechanism to re-enroll each such resource before every OS update and remove the old enrollment after the update.
- There is no concept to cryptographically invalidate/revoke secrets for an older OS version once updated to a new OS version. An attacker can thus always access the secrets generated on old OSes if they manage to exploit an old version of the OS, even if a newer version has already been deployed.

Goals of the new design:

- Provide a fully signed execution path from firmware to userspace, no exceptions
- Provide a fully measured execution path from firmware to userspace, no exceptions
- Separate out TPM PCR assignments by “owner” of the measured resources, so that resources can be bound to them in a fine-grained fashion.
- Allow easy pre-calculation of expected PCR values based on the booted kernel/initrd, configuration, and local identity of the system
- Rollback protection
- Simple & robust updates: one updated file per concept
- Updates without requiring re-enrollment/local preparation of the TPM-protected resources (no more “brittle” PCR hashes that must be propagated into every TPM-protected resource on each OS update)
- System ready for easy remote attestation, to prove validity of the booted OS, configuration and local identity
- Ability to bind secrets to specific phases of the boot, e.g. the root fs encryption key should be retrievable from the TPM only in the initrd, but not after the host has transitioned into the root fs.
- Reasonably secure, automatic, unattended unlocking of disk encryption secrets should be possible.
- “Democratize” use of PCR policies by defining PCR register meanings, and making binding to them robust against updates, so that external projects can safely and securely bind their own data to them (or use them for remote attestation) without risking breakage whenever the OS is updated.
- Build around TPM 2.0 (with graceful fallback for TPM-less systems if desired, but TPM 1.2 support is out of scope)

Considered attack scenarios and considerations:

- Evil Maid: neither online nor offline (i.e. “at rest”) physical access to a storage device should enable an attacker to read the user’s plaintext data on disk (confidentiality); neither online nor offline physical access to a storage device should allow undetected modification/backdooring of user data or OS (integrity), or exfiltration of secrets.
- TPMs are assumed to be reasonably “secure”, i.e. they can securely store/encrypt secrets. Communication with the TPM is not “secure” though, and must be protected on the wire.
- Similarly, the CPU is assumed to be reasonably “secure”.
- SecureBoot is assumed to be reasonably “secure” to permit validated boot up to and including shim+boot loader+kernel (but see the discussion below).
- All user data must be encrypted and authenticated. All vendor and administrator data must be authenticated.
- It is assumed that all software involved regularly contains vulnerabilities and requires frequent updates to address them, plus regular revocation of old versions.
- It is further assumed that key material used for signing code by the OS vendor can reasonably be kept secure (via use of HSMs and similar, where secret key information never leaves the signing hardware) and does not require frequent roll-over.

Proposed Construction

Central to the proposed design is the concept of a Unified Kernel Image (UKI). A UKI combines a Linux kernel image, an initrd and a UEFI boot stub program (plus further resources, see below) into one single UEFI PE file that can either be invoked directly by the UEFI firmware (which is useful in particular in some cloud/Confidential Computing environments) or through a boot loader (which is generally useful to implement support for multiple kernel versions, with interactive or automatic selection of the image to boot into, potentially with automatic fallback management to increase robustness).

UKI Components

Specifically, UKIs typically consist of the following resources:

- A UEFI boot stub, i.e. a small piece of code that still runs in UEFI mode and transitions into the Linux kernel included in the UKI (e.g., as implemented in sd-stub, see below)
- The Linux kernel to boot, in the .linux PE section
- The initrd that the kernel shall unpack and invoke, in the .initrd PE section
- A kernel command line string, in the .cmdline PE section
- Optionally, information describing the OS this kernel is intended for, in the .osrel PE section (derived from /etc/os-release of the booted OS). This is useful for presentation of the UKI in the boot loader menu, and for ordering it against other entries, using the included version information.
- Optionally, kernel release information (i.e. uname -r output) in the .uname PE section. This is also useful for presentation of the UKI in the boot loader menu, and for ordering it against other entries.
- Optionally, a boot splash to bring to screen before transitioning into the Linux kernel, in the .splash PE section
- Optionally, a compiled Devicetree database file, for systems which need it, in the .dtb PE section
- Optionally, the public key in PEM format that matches the signatures of the .pcrsig PE section (see below), in a .pcrpkey PE section.
- Optionally, a JSON file encoding expected PCR 11 hash values seen from userspace once the UKI has booted up, along with signatures of these expected PCR 11 hash values, matching a specific public key, in the .pcrsig PE section. (Note: we use the plural for “values” and “signatures” here, as this JSON file will typically carry a separate value and signature for each PCR bank for PCR 11, i.e. one pair of value and signature for the SHA1 bank, another pair for the SHA256 bank, and so on. This ensures that when enrolling or unlocking a TPM-bound secret we always have a signature around matching the banks available locally; after all, which banks are supported is up to the local hardware. For the sake of simplifying this already overly complex topic, we’ll pretend in the rest of the text that there is only one PCR signature per UKI we have to care about, even if this is not actually the case.)
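
To make the per-bank point concrete, here is a minimal Python sketch of how a consumer might pick the entry matching a bank the local TPM actually implements. The JSON layout below (an object keyed by bank name, each entry holding an expected PCR 11 value and a signature) is a simplified, hypothetical schema chosen for illustration, not the exact format systemd writes into .pcrsig; the `pick_bank_entry` helper and the placeholder strings are likewise made up for this example.

```python
import json

# Hypothetical, simplified .pcrsig-like payload with one entry per PCR bank.
# The layout and the "<...>" placeholder strings are illustrative only.
PCRSIG_JSON = """
{
  "sha1":   {"pcr": 11, "value": "<expected sha1 PCR 11>",   "sig": "<signature>"},
  "sha256": {"pcr": 11, "value": "<expected sha256 PCR 11>", "sig": "<signature>"}
}
"""


def pick_bank_entry(pcrsig: dict, local_banks: list) -> tuple:
    """Return (bank, entry) for the first locally implemented bank the UKI signed.

    local_banks lists the banks the local TPM implements, in order of preference.
    """
    for bank in local_banks:
        if bank in pcrsig:
            return bank, pcrsig[bank]
    raise LookupError("UKI carries no PCR 11 signature for any locally available bank")


pcrsig = json.loads(PCRSIG_JSON)
bank, entry = pick_bank_entry(pcrsig, ["sha384", "sha256", "sha1"])
print(bank, entry["pcr"])  # sha256 11
```

Here the local TPM prefers SHA384, which the UKI did not sign, so the sketch falls back to the SHA256 entry; a UKI signing only one bank would fail on hardware lacking it.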

Given that UKIs are regular UEFI PE files, they can be signed as one for SecureBoot, protecting all of the individual resources listed above at once, as well as their combination. Standard Linux tools such as sbsigntool and pesign can be used to sign UKI files.

UKIs wrap all of the above data in a single file, hence all of the above components can be updated in one go through single-file atomic updates. This is useful given that the primary expected storage place for these UKIs is the UEFI System Partition (ESP), which is a VFAT file system with limited data safety guarantees.

UKIs can be generated via a single, relatively simple objcopy invocation that glues the listed components together, generating one PE binary that can then be signed for SecureBoot. (For details on building these, see below.)

Note that the primary location to place UKIs in is the EFI System Partition (or an otherwise firmware-accessible file system). This typically means a VFAT file system of some form. Hence an effective UKI size limit of 4 GiB is in place (more precisely 4 GiB minus one byte), as that’s the largest file size a FAT32 file system supports.

Basic UEFI Stub Execution Flow

The mentioned UEFI stub program executes the following operations in UEFI mode before transitioning into the Linux kernel included in its .linux PE section:

- The PE sections listed above are searched for in the invoked UKI the stub is part of, and superficially validated (i.e. checked that the general file format is in order).
- All PE sections listed above of the invoked UKI are measured into TPM PCR 11. This TPM PCR is expected to be all zeroes before the UKI initializes. Pre-calculation is thus very straightforward if the resources included in the PE image are known. (Note: as a single exception, the .pcrsig PE section is excluded from this measurement, as it is supposed to carry the expected result of the measurement, and thus cannot also be an input to it; see below for further details about this section.)
- If the .splash PE section is included in the UKI, it is brought onto the screen.
- If the .dtb PE section is included in the UKI, it is activated using the Devicetree UEFI “fix-up” protocol.
- If a command line was passed from the boot loader to the UKI executable, it is discarded if SecureBoot is enabled, and the command line from the .cmdline section is used instead. If SecureBoot is disabled and a command line was passed, it is used in place of the one from .cmdline. Either way the command line used is measured into TPM PCR 12. (This of course removes the local user’s flexibility to control the kernel command line. In many scenarios this is probably considered beneficial, but in others it is not, and some flexibility might be desired. Thus, this concept probably needs to be extended sooner or later to allow more flexible kernel command line policies to be enforced via definitions embedded into the UKI. For example: allowing definition of multiple kernel command lines the user/boot menu can select one from; allowing additional allowlisted parameters to be specified; or even optionally allowing any verification of the kernel command line to be turned off even in SecureBoot mode. It would then be up to the builder of the UKI to decide on the kernel command line policy.)
- It sets a couple of volatile EFI variables to inform userspace about executed TPM PCR measurements (and which PCR registers were used), and other execution properties. (For example: the EFI variable StubPcrKernelImage in the 4a67b082-0a4c-41cf-b6c7-440b29bb8c4f vendor namespace indicates the PCR register used for the UKI measurement, i.e. the value “11”.)
- An initrd cpio archive is dynamically synthesized from the .pcrsig and .pcrpkey PE section data (this is later passed to the invoked Linux kernel as an additional initrd, to be overlaid with the main initrd from the .initrd section). These files are later available in the /.extra/ directory in the initrd context.
- The Linux kernel from the .linux PE section is invoked with a combined initrd that is composed of the blob from the .initrd PE section, the dynamically generated initrd containing the .pcrsig and .pcrpkey PE sections, and possibly some additional components like sysexts or syscfgs.
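
The command line rules from the list above can be sketched as follows. This is a plain-Python model, not sd-stub code: the function names are hypothetical, and measuring the command line as its UTF-8 bytes in a single SHA-256 extend is a simplifying assumption, since this document does not specify the exact encoding the stub measures.

```python
import hashlib
from typing import Optional


def extend(pcr: bytes, data: bytes) -> bytes:
    """TPM-style extend for the SHA-256 bank: new = SHA256(old || SHA256(data))."""
    return hashlib.sha256(pcr + hashlib.sha256(data).digest()).digest()


def choose_cmdline(embedded: str, passed: Optional[str], secure_boot: bool) -> str:
    """Pick the kernel command line the way the list above describes."""
    if secure_boot or not passed:
        # SecureBoot on (or nothing passed): only the signed .cmdline counts.
        return embedded
    # SecureBoot off and a command line was passed: it replaces .cmdline.
    return passed


def pcr12_after_cmdline(cmdline: str) -> bytes:
    """Expected PCR 12 (assumed all zeroes beforehand) after measuring the command line.

    Measuring the UTF-8 bytes is an assumption made for this sketch.
    """
    return extend(bytes(32), cmdline.encode("utf-8"))


embedded = "root=/dev/mapper/root ro quiet"
print(choose_cmdline(embedded, "init=/bin/sh", secure_boot=True))   # root=/dev/mapper/root ro quiet
print(choose_cmdline(embedded, "init=/bin/sh", secure_boot=False))  # init=/bin/sh
```

Because the command line actually used is what gets measured, a replaced command line (possible only with SecureBoot off) still yields a different PCR 12 value, so secrets bound to PCR 12 would not unseal.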

TPM PCR Assignments

In the construction above we take possession of two PCR registers previously unused on generic Linux distributions:

- TPM PCR 11 shall contain measurements of all components of the UKI (with the exception of the .pcrsig PE section, see above). This PCR will also contain measurements of the boot phase once userspace takes over (see below).
- TPM PCR 12 shall contain measurements of the kernel command line used (plus potentially other forms of parameterization/configuration passed into the UKI, not discussed in this document).

On top of that we intend to define two more PCR registers like this:

- TPM PCR 15 shall contain measurements of the volume encryption key of the root file system of the OS.
- [TPM PCR 13 shall contain measurements of additional extension images for the initrd, to enable a modularized initrd; not covered by this document]

(See the Linux TPM PCR Registry for an overview of how these four PCRs fit into the list of Linux PCR assignments.)

For all four PCRs the assumption is that they are zero before the UKI initializes, and that only the data that the UKI and the OS measure into them is included. This makes pre-calculating them straightforward: given a specific set of UKI components, it is immediately clear what PCR values can be expected in PCR 11 once the UKI has booted up. Given a kernel command line (and other parameterization/configuration) it is clear what PCR values are expected in PCR 12.
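
The pre-calculation argument can be checked with a short Python sketch. It assumes, for simplicity, that each present section is measured into the SHA-256 bank as one extend of its NUL-terminated name followed by one extend of its contents, in the fixed order given by the hypothetical `MEASURED_SECTIONS` list; the actual measurement format and order used by sd-stub may differ, and the section contents are dummy bytes.

```python
import hashlib

# Measurement order assumed for this sketch; .pcrsig is deliberately absent.
MEASURED_SECTIONS = [".linux", ".osrel", ".cmdline", ".initrd",
                     ".splash", ".dtb", ".uname", ".pcrpkey"]


def extend(pcr: bytes, data: bytes) -> bytes:
    """TPM-style extend for the SHA-256 bank: new = SHA256(old || SHA256(data))."""
    return hashlib.sha256(pcr + hashlib.sha256(data).digest()).digest()


def expected_pcr11(sections: dict) -> bytes:
    """Pre-calculate PCR 11 from UKI section contents, starting from all zeroes."""
    pcr = bytes(32)
    for name in MEASURED_SECTIONS:
        if name not in sections:
            continue  # optional sections that are absent are not measured
        pcr = extend(pcr, name.encode("ascii") + b"\0")  # section name (assumed)
        pcr = extend(pcr, sections[name])                 # section contents
    return pcr  # any ".pcrsig" key in `sections` is ignored


uki = {".linux": b"<kernel>", ".initrd": b"<initrd>", ".cmdline": b"ro quiet"}
print(expected_pcr11(uki).hex())
```

Adding a .pcrsig entry to `uki` leaves the result unchanged, which is what allows a signature over this value to be stored inside the very UKI it describes.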

Note that these four PCRs are defined by the conceptual “owner” of the resources measured into them. PCR 11 only contains resources the OS vendor controls. Thus it is straightforward for the OS vendor to pre-calculate and then cryptographically sign the expected values for PCR 11. The PCR 11 values will be identical on all systems that run the same version of the UKI. PCR 12 only contains resources the administrator controls, thus the administrator can pre-calculate PCR values, and they will be correct on all instances of the OS that use the same parameters/configuration. PCR 15 only contains resources inherently local to the local system, i.e. the cryptographic key material that encrypts the root file system of the OS.

Separating out these three roles does not imply that they actually need to be held by separate parties. However, the assumption is that in many popular environments these three roles should be separate.

By separating out these PCRs by the owner’s role, it becomes straightforward to remotely attest, individually, the software that runs on a node (PCR 11), the configuration it uses (PCR 12) or the identity of the system (PCR 15). Moreover, it becomes straightforward to robustly and securely encrypt data so that it can only be unlocked on a specific set of systems that share the same OS, or the same configuration, or have a specific identity, or a combination thereof.

Note that, to our knowledge, the mentioned PCRs are so far not typically used on generic Linux-based operating systems. Windows uses them, but given that Windows and Linux should typically not be included in the same boot process, this should be unproblematic, as Windows’ use of these PCRs should thus not conflict with ours.

To summarize:

| PCR | Purpose | Owner | Expected Value before UKI boot | Pre-Calculable |
|-----|---------|-------|--------------------------------|----------------|
| 11 | Measurement of UKI components and boot phases | OS Vendor | Zero | Yes (at UKI build time) |
| 12 | Measurement of kernel command line, additional kernel runtime configuration such as systemd credentials, systemd syscfg images | Administrator | Zero | Yes (when system configuration is assembled) |
| 13 | System Extension Images of initrd (and possibly more) | (Administrator) | Zero | Yes (when set of extensions is assembled) |
| 15 | Measurement of root file system volume key (possibly later more: measurement of root file system UUIDs and labels and of the machine ID /etc/machine-id) | Local System | Zero | Yes (after first boot, once all such IDs are determined) |

Signature Keys

In the model above, two private/public key pairs are particularly relevant:

- The SecureBoot key to sign the UKI PE executable with. This controls the permissible choices of OS/kernel.
- The key to sign the expected PCR 11 values with. Signatures made with this key end up in the .pcrsig PE section. The public key part ends up in the .pcrpkey PE section.

Typically the key pair for the PCR 11 signatures should be chosen with a narrow focus, reused for exactly one specific OS (e.g. “Fedora Desktop Edition”) and the series of UKIs that belong to it (all the way through all the versions of the OS). The SecureBoot signature key can be used with a broader focus, if desired. By keeping the PCR 11 signature key narrow in focus, one can ensure that secrets bound to the signature key can only be unlocked on the narrow set of UKIs desired.

TPM Policy Use

Depending on the intended access policy to a resource protected by the TPM, one or more of the PCRs described above should be selected to bind the TPM policy to.

For example, the root file system encryption key should likely be bound to TPM PCR 11, so that it can only be unlocked if a specific set of UKIs is booted (once acquired, it should then be measured into PCR 15, as discussed above, so that later TPM objects can be bound to it further down the chain). With the model described above this is reasonably straightforward to do:

- When userspace wants to bind disk encryption to a specific series of UKIs (“enrollment”), it looks for the public key passed to the initrd in the /.extra/ directory (which, as discussed above, originates in the .pcrpkey PE section of the UKI). The relevant userspace component (e.g. systemd) is then responsible for generating a random key to be used as the symmetric encryption key for the storage volume (let’s call it the disk encryption key here, DEK). The TPM is then used to encrypt (“seal”) the DEK with its internal Storage Root Key (TPM SRK). A TPM2 policy is bound to the encrypted DEK. The policy enforces that the DEK may only be decrypted if a valid signature is provided that matches the state of PCR 11 and the public key provided in the /.extra/ directory of the initrd. The plaintext DEK is passed to the kernel to implement disk encryption (e.g. LUKS/dm-crypt). (Alternatively, hardware disk encryption can be used too, e.g. Intel MKTME, AMD SME or even OPAL, all of which are outside of the scope of this document.) The TPM-encrypted version of the DEK that the TPM returned is written to the encrypted volume’s superblock.
- When userspace wants to unlock disk encryption on a specific UKI, it looks for the signature data passed to the initrd in the /.extra/ directory (which, as discussed above, originates in the .pcrsig PE section of the UKI). It then reads the encrypted version of the DEK from the superblock of the encrypted volume. The signature and the encrypted DEK are then passed to the TPM. The TPM then checks whether the current PCR 11 state matches the supplied signature from the .pcrsig section and the public key used during enrollment. If all checks out, it decrypts (“unseals”) the DEK and passes it back to the OS, where it is then passed to the kernel, which implements the symmetric part of disk encryption.

Note that in this scheme the encrypted volume’s DEK is not bound to specific literal PCR hash values, but to a public key which is expected to sign PCR hash values.

Also note that the state of PCR 11 only matters during unlocking. It is not used or checked when enrolling.
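
The enrollment and unlocking flow above can be modeled end to end in a toy Python sketch. Everything here is simulated: the hypothetical `ToyTPM` class, the XOR “sealing” under a random SRK-like secret, and the unpadded textbook RSA key built from two Mersenne primes are illustrative stand-ins with no security value, and in this toy the signature covers the PCR 11 value directly rather than a TPM policy digest. What it shows is the shape of the policy: the sealed DEK records only the public key, PCR 11 is checked only at unseal time, and an updated UKI with a new PCR 11 value and a fresh vendor signature unlocks the same sealed DEK without re-enrollment.

```python
import hashlib
import secrets

# Toy textbook RSA key for the vendor (NO padding, NOT secure): two Mersenne primes.
P, Q, E = 2**89 - 1, 2**107 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent; would live in the vendor's HSM
VENDOR_PUBKEY = (N, E)             # what .pcrpkey would carry, in toy form


def _digest(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


def vendor_sign(pcr11_value: bytes) -> int:
    """Vendor side, at UKI build time: sign an expected PCR 11 value (.pcrsig)."""
    return pow(_digest(pcr11_value, N), D, N)


def verify(pubkey: tuple, pcr11_value: bytes, sig: int) -> bool:
    n, e = pubkey
    return pow(sig, e, n) == _digest(pcr11_value, n)


class ToyTPM:
    """Stand-in for the TPM: holds an SRK-like secret and the current PCR 11."""

    def __init__(self) -> None:
        self._srk = secrets.token_bytes(32)  # never leaves the "chip"
        self.pcr11 = bytes(32)

    def _keystream(self, pubkey: tuple) -> bytes:
        # Ties the sealed blob to both the SRK and the enrolled public key.
        return hashlib.sha256(self._srk + repr(pubkey).encode()).digest()

    def seal(self, dek: bytes, pubkey: tuple) -> dict:
        """Enrollment (32-byte DEK assumed): PCR 11 is neither read nor checked."""
        ks = self._keystream(pubkey)
        return {"pubkey": pubkey, "sealed": bytes(a ^ b for a, b in zip(dek, ks))}

    def unseal(self, blob: dict, sig: int) -> bytes:
        """Unlocking: sig must be the enrolled key's signature over the current PCR 11."""
        if not verify(blob["pubkey"], self.pcr11, sig):
            raise PermissionError("current PCR 11 is not signed by the enrolled key")
        ks = self._keystream(blob["pubkey"])
        return bytes(a ^ b for a, b in zip(blob["sealed"], ks))


tpm = ToyTPM()
dek = secrets.token_bytes(32)
blob = tpm.seal(dek, VENDOR_PUBKEY)               # written to the volume's superblock

tpm.pcr11 = hashlib.sha256(b"UKI 5.1").digest()   # stand-in for the measured PCR 11
assert tpm.unseal(blob, vendor_sign(tpm.pcr11)) == dek

tpm.pcr11 = hashlib.sha256(b"UKI 5.2").digest()   # OS update: new PCR 11 value
assert tpm.unseal(blob, vendor_sign(tpm.pcr11)) == dek  # same blob, no re-enrollment
```

In real TPM 2.0 terms this corresponds roughly to a signed (authorized) PCR policy; the toy only mirrors which inputs matter at which step.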

In this scenario:

- The inputs to the TPM part of the enrollment process are the TPM’s internal SRK, the plaintext DEK provided by the OS, and the public key later used for signing expected PCR values, also provided by the OS. The output is the encrypted (“sealed”) DEK.
- The inputs to the TPM part of the unlocking process are the TPM’s internal SRK, the current TPM PCR 11 values, the public key used during enrollment, a signature that matches both these PCR values and the public key, and the encrypted DEK. The output is the plaintext (“unsealed”) DEK.

Note that sealing/unsealing is done entirely on the TPM chip; the host OS just provides the inputs (well, only the inputs that the TPM chip doesn’t already know on its own), and receives the outputs. With the exception of the plaintext DEK, none of the inputs/outputs are sensitive, and they can safely be stored in the open. On the wire the plaintext DEK is protected via TPM parameter encryption (not discussed in detail here because, though important, it is out of scope for this document).

TPM PCR 11 is the most important of the mentioned PCRs, and its use is thus explained in detail here. The other mentioned PCRs can be used in similar ways, but signatures/public keys must be provided via other means.

This scheme builds on the functionality Linux’ LUKS2 provides, i.e. key management supporting multiple slots, and the ability to embed arbitrary metadata in the encrypted volume’s superblock. Note that this means the TPM2-based logic explained here doesn’t have to be the only way to unlock an encrypted volume. For example, in many setups it is wise to enroll both this TPM-based mechanism and an additional “recovery key” (i.e. a high-entropy computer-generated passphrase the user can provide manually in case they lose access to the TPM and need to access their data), either of which can be used to unlock the volume.

Boot Phases

Secrets needed during boot-up (such as the root file system encryption key) should typically not be accessible anymore afterwards, to protect them from access if a system is attacked during runtime. To implement this, the scheme above is extended in one way: at certain milestones of the boot process additional fixed “words” should be measured into PCR 11. These milestones are placed at conceptual security boundaries, i.e. whenever code transitions from a higher privileged context to a less privileged context.

Specifically:

- When the initrd initializes (“initrd-enter”)
- When the initrd transitions into the root file system (“initrd-leave”)
- When the early boot phase of the OS on the root file system has completed, i.e. all storage and file systems have been set up and mounted, immediately before regular services are started (“sysinit”)
- When the OS on the root file system has completed the boot process far enough to allow unprivileged users to log in (“complete”)
- When the OS begins shutting down (“shutdown”)
- When the service manager is mostly finished with shutting down and is about to pass control to the final phase of the shutdown logic (“final”)

By measuring these additional words into PCR 11, the distinct phases of the boot process can be distinguished in a relatively straightforward fashion, and the expected PCR values in each phase can be determined.
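
The effect of the phase words can be illustrated by continuing the PCR 11 extend chain from the value reached after the UKI measurements. In this Python sketch the starting value is a dummy, the helper names are hypothetical, and measuring each word as its UTF-8 bytes in a single SHA-256 extend is an assumption for illustration; the real encoding used by systemd may differ.

```python
import hashlib

# Phase words as named in this document, in boot order.
PHASES = ["initrd-enter", "initrd-leave", "sysinit", "complete", "shutdown", "final"]


def extend(pcr: bytes, data: bytes) -> bytes:
    """TPM-style extend for the SHA-256 bank: new = SHA256(old || SHA256(data))."""
    return hashlib.sha256(pcr + hashlib.sha256(data).digest()).digest()


def expected_per_phase(pcr11_after_uki: bytes) -> dict:
    """Map each phase word to the PCR 11 value expected right after it is measured."""
    values = {}
    pcr = pcr11_after_uki
    for word in PHASES:
        pcr = extend(pcr, word.encode("utf-8"))  # word encoding assumed
        values[word] = pcr
    return values


uki_pcr11 = hashlib.sha256(b"dummy UKI measurements").digest()  # placeholder start
phases = expected_per_phase(uki_pcr11)

# A root fs key sealed against the signed "initrd-enter" value stops unsealing
# once "initrd-leave" has been measured, because PCR 11 has moved on.
assert phases["initrd-leave"] != phases["initrd-enter"]
```

Since every value derives only from the UKI measurements plus fixed words, the vendor can compute and sign one expected value per phase at build time.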

The phases are measured into PCR 11 (as opposed to some other PCR) mostly because available PCRs are scarce, and because the boot phases defined are typically specific to a chosen OS, and hence fit well with the other data measured into PCR 11: the UKI, which is also specific to the OS. The OS vendor both generates the UKI and defines the boot phases, and thus can safely and reliably pre-calculate/sign the expected PCR values for each phase of the boot.

Revocation/Rollback Protection

In order to secure secrets stored at rest, in particular in environments where unattended decryption shall be possible, it is essential that an attacker cannot use old, known-buggy, but properly signed versions of software to access them.

Specifically, if disk encryption is bound to an OS vendor (via UKIs that include expected PCR values, signed with the vendor’s key), there must be a mechanism to lock out old versions of the OS or UKI from accessing TPM-based secrets once it is determined that the old version is vulnerable.

To implement this we propose making use of one of the “counters” TPM 2.0 devices provide: integer registers that are persistent in the TPM and can only be increased on request of the OS, never decreased. When sealing resources to the TPM, a policy may be declared to the TPM that restricts how the resources can later be unlocked. Here we use one that requires that, along with the expected PCR values (as discussed above), a counter integer range is provided to the TPM chip, together with a suitable signature covering both, matching the public key provided during sealing. The sealing/unsealing mechanism described above is thus extended: the signature passed to the TPM during unsealing now covers both the expected PCR values and the expected counter range. To be able to use a signature associated with a UKI provided by the vendor to unseal a resource, the counter must thus first be increased to at least the lower end of the range the signature is for. By doing so, the ability is lost to unseal the resource with signatures associated with older versions of the UKI, because the upper end of their range disables access once the counter has been increased far enough. By carefully choosing the upper and lower end of the counter range whenever the PCR values for a UKI are signed, it is thus possible to ensure that updates can invalidate prior versions’ access to resources. By placing some space between the upper and lower end of the range, it is possible to allow a controlled level of fallback UKI support, with clearly defined milestones where fallback to older versions of a UKI is no longer permitted.

Example: a hypothetical distribution FooOS releases a regular stream of UKI kernels 5.1, 5.2, 5.3, … It signs the expected PCR values for these kernels with a key pair it maintains in an HSM. When signing UKI 5.1, it includes information directed at the TPM in the signed data, declaring that the TPM counter must be at least 100 and at most 120 (i.e. the range 100…120) for the signature to be usable. Thus, when the UKI is booted up and used for unlocking an encrypted volume, the unlocking code must first increase the counter to 100 if needed, as the TPM will otherwise refuse to unlock the volume. The next release, UKI 5.2, is a feature release, i.e. reverting back to the old kernel locally is acceptable. It thus does not increase the lower bound, but it increases the upper bound for the counter in the signature payload, encoding a valid range 100…121 in the signed payload. Now a major security vulnerability is discovered in UKI 5.1. A new UKI 5.3 is prepared that fixes this issue. It is now essential that UKI 5.1 can no longer be used to unlock the TPM secrets. Thus UKI 5.3 bumps the lower bound to 121 and increases the upper bound by one, allowing the range 121…122. In other words: for each new UKI release the signed data shall include a counter range declaration where the upper bound is increased by one. The lower bound is left as-is between releases, except when an old version shall be cut off, in which case it is bumped to one above the upper bound used by the release being cut off.
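
The FooOS example can be replayed in a few lines of Python. The `MonotonicCounter` class is a plain integer standing in for the TPM’s counter, the range check happens in Python rather than inside a TPM policy, and inclusive ranges (lower ≤ counter ≤ upper) are assumed, consistent with the example’s numbers; the release names and ranges are taken from the example above, while the helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SignedRange:
    """Counter range declared in the signed payload of one UKI release."""
    release: str
    lower: int  # counter must be at least this...
    upper: int  # ...and at most this for the signature to be accepted


# Ranges from the FooOS example above.
RELEASES = [
    SignedRange("5.1", 100, 120),
    SignedRange("5.2", 100, 121),  # feature release: only the upper bound grows
    SignedRange("5.3", 121, 122),  # security fix: lower bound cuts off 5.1
]


class MonotonicCounter:
    """Stand-in for a TPM counter: it can be raised but never lowered."""

    def __init__(self, value: int = 0) -> None:
        self.value = value

    def raise_to(self, target: int) -> None:
        self.value = max(self.value, target)


def try_unlock(counter: MonotonicCounter, sig: SignedRange) -> bool:
    # The unlocking code first bumps the counter to the signature's lower bound...
    counter.raise_to(sig.lower)
    # ...then the TPM accepts the signature only if the counter lies in the range.
    return sig.lower <= counter.value <= sig.upper


counter = MonotonicCounter()
print([try_unlock(counter, r) for r in RELEASES])  # [True, True, True]: boot 5.1, 5.2, 5.3 in turn
print(try_unlock(counter, RELEASES[0]))            # False: 5.1 is locked out (counter is 121)
print(try_unlock(counter, RELEASES[1]))            # True: fallback to 5.2 still works
```

Before 5.3 is booted, falling back from 5.2 to 5.1 still succeeds because the counter sits at 100; the first boot of 5.3 raises it to 121, which permanently closes 5.1’s range while leaving 5.2 usable as a fallback.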

UKI Generation

As mentioned earlier, UKIs are the combination of various resources into one PE file. For most of these individual components there are pre-existing tools to generate them. For example, the included kernel image can be generated with the usual Linux kernel build system. The initrd included in the UKI can be generated with existing tools such as dracut and similar. Once the basic components (.linux, .initrd, .cmdline, .splash, .dtb, .osrel, .uname) have been acquired, the combination process works roughly like this:

- The expected PCR 11 hashes (and signatures for them) for the UKI are calculated. The tool for this takes all basic UKI components and a signing key as input, and generates a JSON object as output that includes both the literal expected PCR hash values and a signature for them (for all selected TPM2 banks).
- The EFI stub binary is then combined with the basic components, the generated JSON PCR signature object from the first step (in the .pcrsig section) and the public key for it (in the .pcrpkey section). This is done via a simple “objcopy” invocation, resulting in a single UKI PE binary.
- The resulting EFI PE binary is then signed for SecureBoot (via a tool such as sbsign or similar).

Note that the UKI model implies pre-built initrds. How to generate these (and securely extend and parameterize them) is outside of the scope of this document, but a related document will be provided highlighting these concepts.

Protection Coverage of SecureBoot Signing and PCRs

The scheme discussed here touches both SecureBoot code signing and TPM PCR measurements. These two distinct mechanisms cover separate parts of the boot process.

Specifically:

- Firmware/shim SecureBoot signing covers the boot loader and the UKI
- TPM PCR 11 covers the UKI components and the boot phase
- TPM PCR 12 covers the admin configuration
- TPM PCR 15 covers the local identity of the host

Note that this means SecureBoot coverage ends once the system transitions from the initrd into the root file system. It is assumed that trust and integrity have been established before this transition by some means, for example LUKS/dm-crypt/dm-integrity, ideally bound to PCR 11 (i.e. UKI and boot phase).

A robust and secure update scheme for PCR 11 (i.e. UKI) has been described above, which allows binding TPM-locked resources to a UKI. For PCR 12 no such scheme is currently designed, but one might be added later (use case: permit access to certain secrets only if the system runs with configuration signed by a specific set of keys). Given that resources measured into PCR 15 typically aren’t updated (or, if they are updated, loss of access to other resources linked to them is desired), no update scheme should be necessary for it.

This document focuses on the three PCRs discussed above. Disk encryption and other userspace components may choose to also bind to other PCRs. However, doing so brings back the PCR brittleness issue this design is supposed to remove: the PCRs defined by the various firmware UEFI/TPM specifications generally have no concept of signatures for expected PCR values.

It is known that the industry-adopted SecureBoot signing keys are too broad to act as more than a denylist for known bad code. It is thus probably a good idea to enroll vendor SecureBoot keys wherever possible (e.g. in environments where the hardware is very well known, and in VM environments), to raise the bar against preparing rogue UKI-like PE binaries that result in PCR values that match expectations but actually contain bad code. Discussion of this is, however, outside of the scope of this document.

Whole OS embedded in the UKI

The above is written under the assumption that the UKI embeds an

initrd whose job it is to set up the root file system: find it,

validate it, cryptographically unlock it and similar. Once the root

file system is found, the system transitions into it.

While this is the traditional design and likely what most systems will

use, it is also possible to embed a regular root file system into the

UKI and avoid any transition to an on-disk root file system. In this

mode the whole OS would be encapsulated in the UKI, and

signed/measured as one. In such a scenario the whole of the OS must be

loaded into RAM and remain there, which typically restricts the

general usability of such an approach. However, for specific purposes

this might be the design of choice, for example to implement

self-sufficient recovery or provisioning systems.

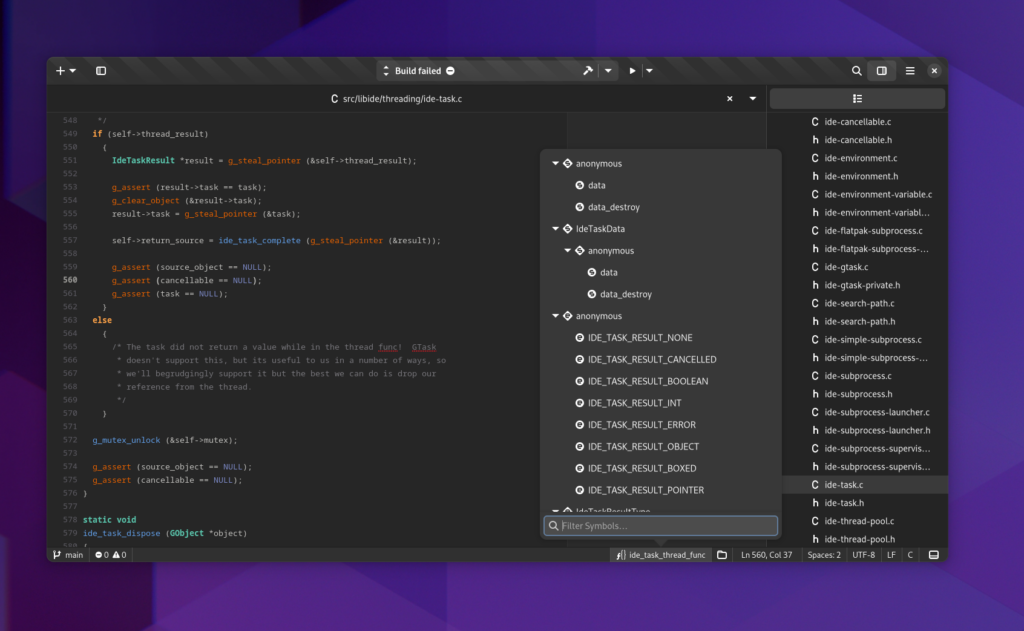

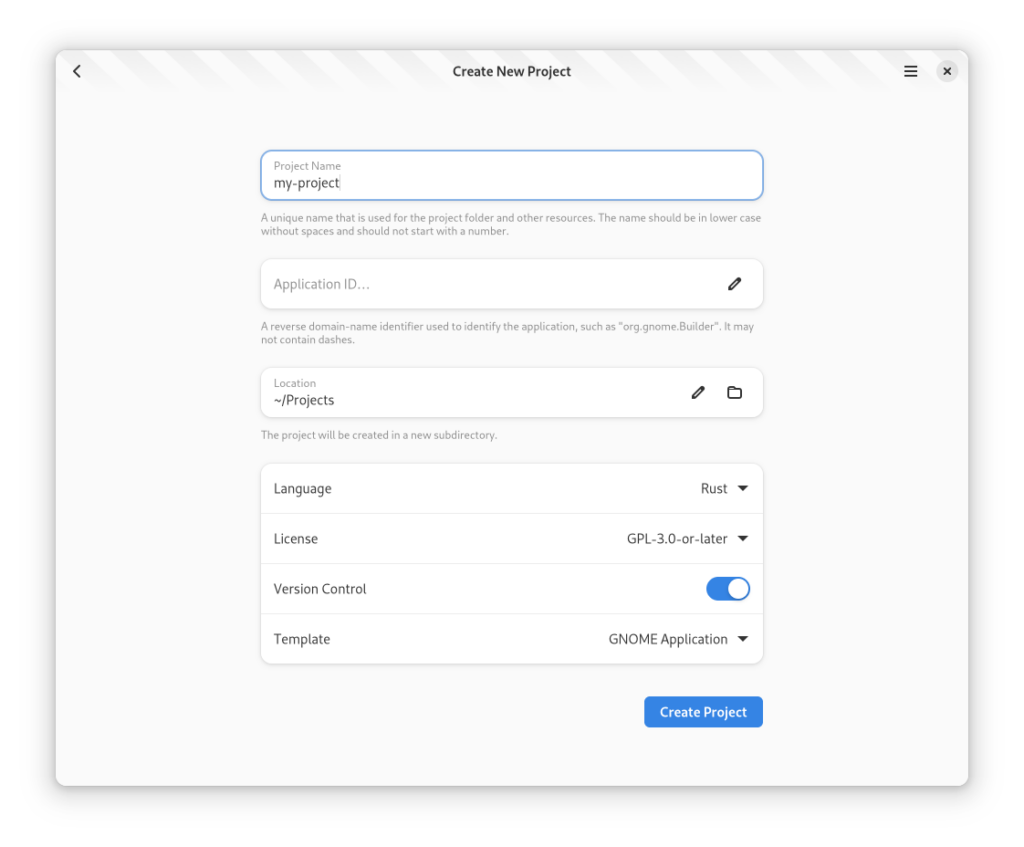

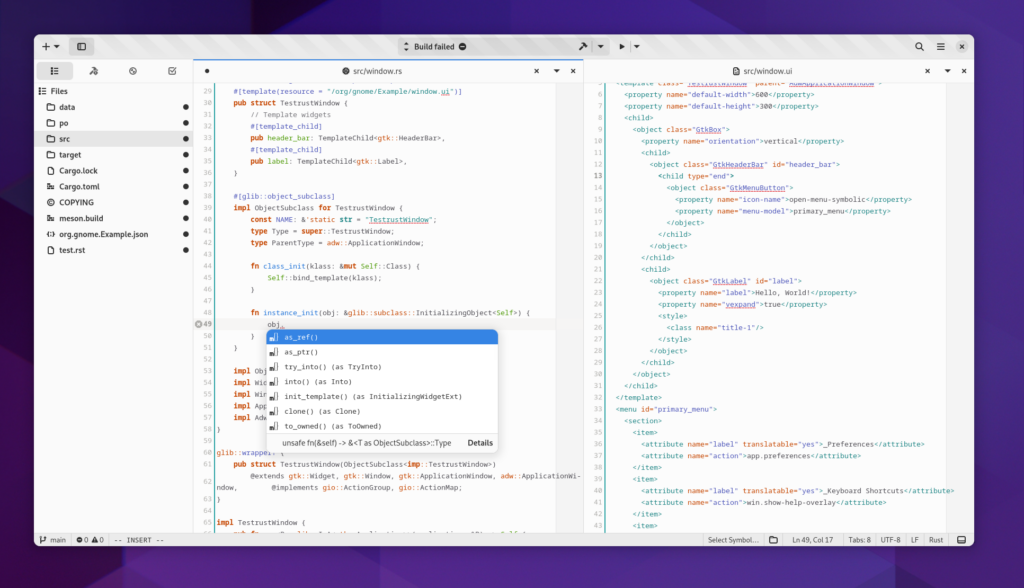

Proposed Implementations & Current Status

The toolset for most of the above is already implemented in systemd and related projects in one way or another. Specifically:

- The systemd-stub (sd-stub for short) component implements the discussed UEFI stub program.
- The systemd-measure tool can be used to pre-calculate expected PCR 11 values given the UKI components, and can sign the result, as discussed in the UKI Image Generation section above.
- The systemd-cryptenroll and systemd-cryptsetup tools can be used to bind a LUKS2 encrypted file system volume to a TPM and PCR 11 public key/signatures, according to the scheme described above. (The two components also implement a “recovery key” concept, as discussed above.)
- The systemd-pcrphase component measures specific words into PCR 11 at the discussed phases of the boot process.
- The systemd-creds tool may be used to encrypt/decrypt data objects called “credentials” that can be passed into services and booted systems, and are automatically decrypted (if needed) immediately before service invocation. Encryption is typically bound to the local TPM, to ensure the data cannot be recovered elsewhere.
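To make the pre-calculation done by systemd-measure more concrete, here is a minimal Python sketch that computes an expected SHA-256 PCR 11 value from a set of UKI sections followed by boot phase words. It is not systemd-measure’s code. The section order, the choice to measure each section name (including its trailing NUL byte) before the section contents, and the exact encoding of the phase words are assumptions modeled on the systemd-measure documentation, and `SECTION_ORDER`, `extend()` and `precalc_pcr11()` are hypothetical names. Only the SHA-256 bank is computed.

```python
import hashlib

# Assumed measurement order of UKI sections, modeled on systemd-measure(1).
# Check the documentation of the systemd version in use before relying on it.
SECTION_ORDER = [".linux", ".osrel", ".cmdline", ".initrd", ".splash", ".dtb", ".pcrpkey"]


def extend(pcr: bytes, data: bytes) -> bytes:
    """TPM extend for the SHA-256 bank: new = SHA256(old || SHA256(data))."""
    return hashlib.sha256(pcr + hashlib.sha256(data).digest()).digest()


def precalc_pcr11(sections: dict[str, bytes], phases: list[str]) -> bytes:
    """Expected PCR 11 value after the given UKI sections and boot phase words."""
    pcr = bytes(32)  # PCR 11 starts out as 32 zero bytes
    for name in SECTION_ORDER:
        if name not in sections:
            continue  # sections missing from the UKI are not measured
        pcr = extend(pcr, name.encode() + b"\0")  # section name incl. trailing NUL (assumed)
        pcr = extend(pcr, sections[name])         # section contents
    for word in phases:
        # Boot phase word, assumed to be measured as plain UTF-8 without a NUL.
        pcr = extend(pcr, word.encode())
    return pcr


if __name__ == "__main__":
    # Placeholder contents; a real tool would read these from the UKI's PE sections.
    uki = {".linux": b"<kernel>", ".initrd": b"<initrd>", ".cmdline": b"rw quiet"}
    for phase in (["enter-initrd"], ["enter-initrd", "leave-initrd"]):
        print(":".join(phase), precalc_pcr11(uki, phase).hex())
```

Because every extend depends on all earlier ones, a pre-calculated value only matches PCR 11 at one specific boot phase; the UKI content alone is not enough to predict it.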

Note that systemd-stub (i.e. the UEFI code glued into the UKI) is distinct from systemd-boot (i.e. the UEFI boot loader that can manage multiple UKIs and other boot menu items and implements automatic fallback, an interactive menu and a programmatic interface for the OS, among other things). Either can be used without the other (sd-stub without sd-boot and vice versa), though they integrate nicely if used in combination.

Note that the mechanisms described are relatively generic and can be implemented and consumed by other software too. systemd should be considered a reference implementation, though one that has found comprehensive adoption across Linux distributions.

Some concepts discussed above are currently not implemented. Specifically:

- The rollback protection logic is currently not implemented.
- The mentioned measurement of the root file system volume key into PCR 15 is implemented, but not merged into the systemd main branch yet.

The UAPI Group

We recently started a new group for discussing concepts and

specifications of basic OS components, including UKIs as described

above. It's called the UAPI Group. Please

have a look at the various documents and specifications already

available there, and expect more to come. Contributions welcome!

Glossary

TPM

Trusted Platform Module; a security chip found in many modern

systems, both physical systems and increasingly also in virtualized

environments. Traditionally a discrete chip on the mainboard but today

often implemented in firmware, and lately directly in the CPU SoC.

PCR

Platform Configuration Register; a set of registers on a TPM that are initialized to zero at boot. The firmware and OS can “extend” these registers with hashes of data used during the boot process and afterwards. “Extension” means the supplied data is first cryptographically hashed. The resulting hash value is then combined with the previous value of the PCR and the combination hashed again. The result becomes the new value of the PCR. By doing this iteratively for all parts of the boot process (always with the data that will be used next during the boot process), a concept of “Measured Boot” can be implemented: as long as every element in the boot chain measures (i.e. extends into the PCR) the next part of the boot like this, the resulting PCR values prove cryptographically that only a certain set of boot components can have been used to boot up. A standards-compliant TPM usually has 24 PCRs, but more than half of those are already assigned specific meanings by the firmware. Some of the others may be used by the OS; the concepts discussed in this document use four of them.
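The extension operation described above can be modeled in a few lines of Python. This is a software simulation of a single SHA-256 PCR, assuming the register starts out as 32 zero bytes; it does not talk to a real TPM, and the `PCR` class and `measure_chain()` helper are hypothetical names for illustration.

```python
import hashlib


class PCR:
    """Software model of one SHA-256 PCR (not a real TPM)."""

    def __init__(self) -> None:
        self.value = bytes(32)  # initialized to zero at boot

    def extend(self, data: bytes) -> None:
        # Hash the data, combine it with the previous value, hash again.
        digest = hashlib.sha256(data).digest()
        self.value = hashlib.sha256(self.value + digest).digest()


def measure_chain(components: list[bytes]) -> bytes:
    """Each boot stage measures the next one before handing over control."""
    pcr = PCR()
    for component in components:
        pcr.extend(component)
    return pcr.value


if __name__ == "__main__":
    good = measure_chain([b"firmware", b"boot loader", b"kernel"])
    swapped = measure_chain([b"firmware", b"kernel", b"boot loader"])
    tampered = measure_chain([b"firmware", b"boot loader", b"evil kernel"])
    print(good != swapped, good != tampered)  # True True: order and content both matter
```

Since SHA-256 cannot be inverted, nothing can be extended into a PCR to bring it back to an earlier value; the only way to reach the expected final value is to feed in the same data in the same order.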

Measurement

The act of “extending” a PCR with some data object.

SRK

Storage Root Key; a special cryptographic key generated by a TPM

that never leaves the TPM, and can be used to encrypt/decrypt data

passed to the TPM.

UKI

Unified Kernel Image; the concept this document is about. A

combination of kernel, initrd and other resources. See above.

SecureBoot

A mechanism, implemented in firmware, where every software component involved in the boot process is cryptographically signed and checked against a set of public keys stored in the mainboard hardware before it is used.

Measured Boot

A boot process where each component measures (i.e., hashes and extends into a TPM PCR, see above) the next component it will pass control to before doing so. This serves two purposes: it can be used to bind security policy for encrypted secrets to the resulting PCR values (or signatures thereof, see above), and it can be used to reason after the fact about which software was used, for example for the purpose of remote attestation.
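For the after-the-fact reasoning mentioned above, a verifier typically replays the TPM event log and compares the result with the PCR values the TPM reports. The sketch below shows that replay under simplifying assumptions: each event carries only a PCR index and a single SHA-256 digest (real TCG event log entries also carry an event type and digests for several banks), and the “quoted” values are a plain dictionary rather than a TPM quote signed by an attestation key. `Event`, `replay()` and `log_matches()` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Event:
    pcr_index: int
    digest: bytes      # SHA-256 digest of the measured data, as recorded in the log
    description: str   # human-readable label, e.g. which component was measured


def replay(events: list[Event]) -> dict[int, bytes]:
    """Recompute PCR values by re-applying every logged extend operation."""
    pcrs: dict[int, bytes] = {}
    for ev in events:
        old = pcrs.get(ev.pcr_index, bytes(32))
        # The log already holds the data's digest, so only the outer hash is applied.
        pcrs[ev.pcr_index] = hashlib.sha256(old + ev.digest).digest()
    return pcrs


def log_matches(events: list[Event], quoted: dict[int, bytes]) -> bool:
    """True if replaying the log reproduces every PCR value reported by the TPM."""
    replayed = replay(events)
    return all(replayed.get(i, bytes(32)) == v for i, v in quoted.items())


if __name__ == "__main__":
    log = [
        Event(4, hashlib.sha256(b"boot loader").digest(), "boot loader"),
        Event(11, hashlib.sha256(b"enter-initrd").digest(), "boot phase"),
    ]
    quoted = replay(log)  # stand-in for values taken from a real, signed TPM quote
    forged = [Event(4, hashlib.sha256(b"other loader").digest(), "boot loader"), log[1]]
    print(log_matches(log, quoted), log_matches(forged, quoted))  # True False
```

A log that was edited after the fact no longer reproduces the quoted values, so its individual entries can be trusted only once this check succeeds.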

initrd

Short for “initial RAM disk”, which, strictly speaking, is a misnomer today, because no RAM disk is involved anymore, but rather a tmpfs file system instance. Also known as “initramfs”, which is also misleading, given the file system is not ramfs anymore but tmpfs (both of which are in-memory file systems on Linux, with different semantics). The initrd is passed to the Linux kernel and is basically a file system tree in a cpio archive. The kernel unpacks the image into a tmpfs (i.e., into an in-memory file system), and then executes a binary from it. It thus contains the binaries for the first userspace code the kernel invokes. Typically, the initrd’s job is to find the actual root file system, unlock it (if encrypted), and transition into it.
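As an illustration of the cpio archive mentioned above, this sketch writes a tiny archive in the “newc” variant (header magic `070701`), which the kernel’s initramfs unpacker accepts, followed by the usual `TRAILER!!!` end marker. It is deliberately minimal: ownership, timestamps and device numbers are all zero, every entry gets a link count of one, and `cpio_entry()` and `build_initrd()` are hypothetical names. Real initrd generators such as dracut do considerably more.

```python
def _pad4(length: int) -> bytes:
    """Zero bytes needed to round `length` up to a multiple of four."""
    return b"\0" * (-length % 4)


def cpio_entry(path: str, mode: int, data: bytes, ino: int) -> bytes:
    """Encode one file or directory as a "newc" cpio record."""
    name = path.encode() + b"\0"  # c_namesize counts the trailing NUL
    fields = [
        ino,          # c_ino
        mode,         # c_mode: file type bits plus permissions
        0, 0,         # c_uid, c_gid
        1,            # c_nlink
        0,            # c_mtime
        len(data),    # c_filesize
        0, 0, 0, 0,   # c_devmajor, c_devminor, c_rdevmajor, c_rdevminor
        len(name),    # c_namesize
        0,            # c_check (always zero in the newc variant)
    ]
    record = b"070701" + "".join(f"{f:08X}" for f in fields).encode()
    record += name
    record += _pad4(len(record))       # header plus name is padded to four bytes
    record += data + _pad4(len(data))  # and so is the file data
    return record


def build_initrd(files: dict[str, tuple[int, bytes]]) -> bytes:
    """Build an archive from {path: (mode, data)}, terminated by the trailer."""
    archive = b""
    for ino, (path, (mode, data)) in enumerate(files.items(), start=1):
        archive += cpio_entry(path, mode, data, ino)
    return archive + cpio_entry("TRAILER!!!", 0, b"", 0)


if __name__ == "__main__":
    image = build_initrd({
        "etc": (0o040755, b""),                       # directory
        "etc/hostname": (0o100644, b"demo\n"),         # regular file
        "init": (0o100755, b"<placeholder binary>"),   # placeholder for /init
    })
    print(len(image))
```

By default the kernel executes `/init` from the unpacked tree, which is why the usage example reserves that path; a working initrd would of course place a real executable there.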

UEFI

Short for “Unified Extensible Firmware Interface”, it is a widely

adopted standard for PC firmware, with native support for SecureBoot

and Measured Boot.

EFI

More or less synonymous with UEFI, IRL.

Shim

A boot component originating in the Linux world, which in a way extends the public key database SecureBoot maintains (which is under the control of Microsoft) with a second layer (which is under the control of the Linux distributions and of the owner of the physical device).

PE

Portable Executable; a file format for executable binaries, originally from the Windows world, but also used by UEFI firmware. PE files may contain code and data, organized in labeled “sections”.
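Since a UKI is a PE binary whose components live in labeled sections, listing those sections is a quick way to inspect one. The sketch below follows the DOS header’s pointer to the PE signature, reads the COFF file header and walks the section table using only Python’s `struct` module. It assumes a well-formed image, skips over the optional header without interpreting it, and reads names from the 8-byte name field only (long names kept in a string table are not resolved). `Section`, `pe_sections()` and `section_data()` are hypothetical names.

```python
import struct
import sys
from typing import NamedTuple


class Section(NamedTuple):
    name: str
    virtual_size: int  # VirtualSize: size of the section's data in memory
    raw_offset: int    # PointerToRawData: file offset of the data
    raw_size: int      # SizeOfRawData: size in the file, rounded up to file alignment


def pe_sections(image: bytes) -> list[Section]:
    """List the sections of a PE image, e.g. a UKI."""
    if image[:2] != b"MZ":
        raise ValueError("missing DOS 'MZ' signature")
    (pe_offset,) = struct.unpack_from("<I", image, 0x3C)  # e_lfanew
    if image[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("missing PE signature")
    # COFF file header: Machine, NumberOfSections, TimeDateStamp,
    # PointerToSymbolTable, NumberOfSymbols, SizeOfOptionalHeader, Characteristics
    _, count, _, _, _, opt_size, _ = struct.unpack_from("<HHIIIHH", image, pe_offset + 4)
    table = pe_offset + 4 + 20 + opt_size  # section table follows the optional header
    sections = []
    for i in range(count):
        # Each 40-byte entry starts with Name, VirtualSize, VirtualAddress,
        # SizeOfRawData and PointerToRawData.
        name, vsize, _vaddr, raw_size, raw_offset = struct.unpack_from(
            "<8sIIII", image, table + 40 * i)
        sections.append(Section(name.rstrip(b"\0").decode("ascii", "replace"),
                                vsize, raw_offset, raw_size))
    return sections


def section_data(image: bytes, section: Section) -> bytes:
    """Section contents without the file-alignment padding."""
    size = min(section.virtual_size, section.raw_size)
    return image[section.raw_offset:section.raw_offset + size]


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], "rb") as f:  # path to a PE binary, e.g. a UKI
        data = f.read()
    for s in pe_sections(data):
        print(f"{s.name:10} offset={s.raw_offset:#x} size={s.virtual_size}")
```

Run against a UKI, one would typically expect sections such as `.linux`, `.initrd` and `.cmdline` next to the stub’s own code and data sections.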

ESP

EFI System Partition; a special partition on a storage medium in which the firmware looks for UEFI PE binaries to execute at boot.

HSM

Hardware Security Module; a piece of hardware that can generate and

store secret cryptographic keys, and execute operations with them,

without the keys leaving the hardware (though this is

configurable). TPMs can act as HSMs.

DEK

Disk Encryption Key; a symmetric cryptographic key used for unlocking disk encryption, i.e. passed to LUKS/dm-crypt for activating an encrypted storage volume.

LUKS2

Linux Unified Key Setup Version 2; a specification for a superblock

for encrypted volumes widely used on Linux. LUKS2 is the default

on-disk format for the cryptsetup suite of tools. It provides

flexible key management with multiple independent key slots and allows

embedding arbitrary metadata in a JSON format in the superblock.
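The split between a fixed binary header and a JSON metadata area can be seen with a short parser. The sketch below assumes the primary LUKS2 header layout from the LUKS2 on-disk format specification: the magic `LUKS\xba\xbe`, a big-endian 16-bit version and 64-bit total header size at the start, a 4096-byte binary header, and the NUL-padded JSON area directly after it. It does not verify the header checksum or consult the secondary header copy, so it is only suitable for inspection. `read_luks2_metadata()` and `summarize()` are hypothetical names.

```python
import json
import struct
import sys

LUKS2_MAGIC = b"LUKS\xba\xbe"
BINARY_HEADER_SIZE = 4096  # the JSON area starts right after the binary header


def read_luks2_metadata(header: bytes) -> dict:
    """Return the JSON metadata stored in a primary LUKS2 header."""
    # Big-endian: 6-byte magic, u16 version, u64 total header size.
    magic, version, hdr_size = struct.unpack_from(">6sHQ", header, 0)
    if magic != LUKS2_MAGIC or version != 2:
        raise ValueError("not a LUKS2 header")
    if not BINARY_HEADER_SIZE < hdr_size <= len(header):
        raise ValueError("header size out of range")
    json_area = header[BINARY_HEADER_SIZE:hdr_size]
    # The JSON text is NUL-terminated; the rest of the area is zero padding.
    return json.loads(json_area.split(b"\0", 1)[0])


def summarize(metadata: dict) -> None:
    """Print the keyslots and the tokens that refer to them."""
    for slot_id, slot in sorted(metadata.get("keyslots", {}).items()):
        print(f"keyslot {slot_id}: type={slot.get('type')}")
    for token_id, token in sorted(metadata.get("tokens", {}).items()):
        print(f"token {token_id}: type={token.get('type')} keyslots={token.get('keyslots')}")


if __name__ == "__main__" and len(sys.argv) > 1:
    # Reading a block device usually requires root privileges.
    with open(sys.argv[1], "rb") as dev:
        start = dev.read(BINARY_HEADER_SIZE)
        if start[:6] != LUKS2_MAGIC:
            sys.exit("not a LUKS2 device")
        (size,) = struct.unpack_from(">Q", start, 8)  # total header size
        summarize(read_luks2_metadata(start + dev.read(size - BINARY_HEADER_SIZE)))
```

On a volume enrolled for TPM2 unlocking with systemd-cryptenroll, one would expect a token of type `systemd-tpm2` that references the keyslot it unlocks; `cryptsetup luksDump` displays the same metadata.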

Thanks

I’d like to thank Alain Gefflaut, Anna Trikalinou, Christian Brauner,

Daan de Meyer, Luca Boccassi, Zbigniew Jędrzejewski-Szmek for

reviewing this text.