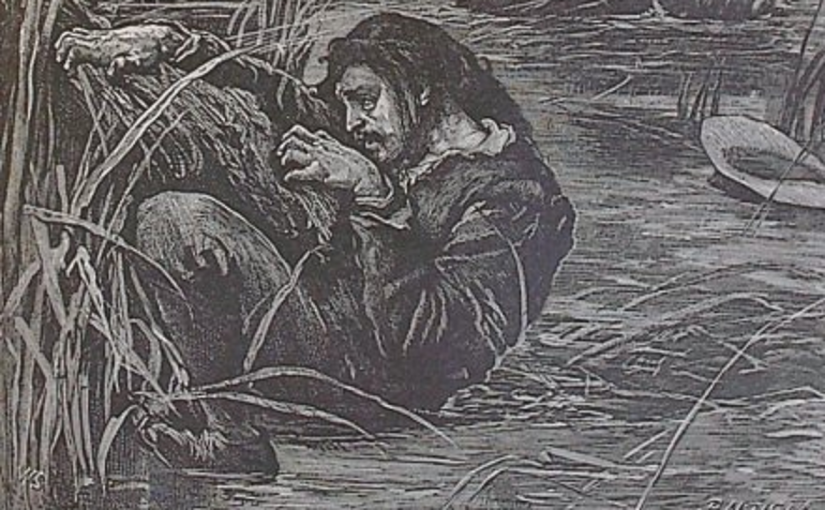

(The picture on this post is from Pilgrim’s Progress, as Christian struggles in the Slough of Despond. I feel his pain here.)

I have a couple of use cases that require pulling from an external data source into MediaWiki. Specifically, they need to pull information such as employee data in Active Directory or a company-wide taxonomy that is maintained outside of MediaWiki. Lucky for me, there is the Page Forms extension.

Page Forms provides a couple of ways to do this: directly from an outside source or using the methods provided by the External Data extension.

Since I was going to have to write some sort of PHP shim, anyway, I decided to go with the first method.

Writing the PHP script to provide possible completions when it was given a string was the easy part. As a proof of concept, I took the list of words in /usr/share/dict/words on my laptop, trimmed it to 1/10th its size using

sed -n '0~20p' /usr/share/dict/words > short.txt

and used a simple PHP script (hosted on winkyfrown.com) to provide the data.

That script is the result of a bit of a struggle. Despite the fact that the documentation pointed to a working example (after I updated it, natch), that wasn’t clear enough for me. I had to spend a few hours poking through the source and instrumenting the code to find the answer.

And that is the reason for this weblog post. I posted the same thing earlier today to the Semantic MediaWiki Users mailing list after an earlier plea for help. What resulted is the following stream-of-consciousness short story:

I must be doing something wrong because I keep seeing this error in the JS console (in addition to not seeing any results):

TypeError: text is undefined 1 ext.sf.select2.base.js:251:4

removeDiacritics https://example.dom/w/extensions/SemanticForms/libs/ext.sf.select2.base.js:251:4

textHighlight https://example.dom/w/extensions/SemanticForms/libs/ext.sf.select2.base.js:258:23

formatResult https://example.dom/w/extensions/SemanticForms/libs/ext.sf.select2.base.js:100:15

populate https://example.dom/w/extensions/SemanticForms/libs/select2.js:920:39

populateResults https://example.dom/w/extensions/SemanticForms/libs/select2.js:942:21

updateResults/<.callback< https://example.dom/w/extensions/SemanticForms/libs/select2.js:1732:17

bind/< https://example.dom/w/extensions/SemanticForms/libs/select2.js:672:17

success https://example.dom/w/extensions/SemanticForms/libs/select2.js:460:25

fire https://example.dom/w/load.php:3148:10

fireWith https://example.dom/w/load.php:3260:7

done https://example.dom/w/load.php:9314:5

callback https://example.dom/w/load.php:9718:8

The URL http://example.dom/custom-autocomplete.php?l=lines&f=words

shows all the lines from the source (in this case, every 10th line from /usr/share/dict/words) that match “lines”. This example results in:

{"sfautocomplete":

{"2435":{"id":"borderlines",

"value":"borderlines",

"label":"borderlines",

"text":"borderlines"},

…

In my PHP script, I blatted the value over the keys “id”, “value”, “label” and “text” because I saw each of them being used, but not why.

Anyway, PF is configured to read this correctly, so I can see that when the user types “lines” an XHR request is made for

https://example.dom/w/api.php?action=sfautocomplete&format=json&external_url=tempURL&substr=lines&_=1494345628246

and it returns

{"warnings": {

"main": {

"*": "Unrecognized parameter: '_'"

}

},

"sfautocomplete": [

{

"id": "borderlines",

"value": "borderlines",

"label": "borderlines",

"text": "borderlines"

}, ....

So far, so good.
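To poke at the same API call outside the browser, the request URL can be rebuilt by hand. This is only a sketch: the host and the `tempURL` alias are the placeholders from the request above, the function name `autocompleteUrl` is made up, and I left out the `_` parameter, which looks like a cache-busting timestamp and only triggers the warning.

```javascript
// Rebuild the autocomplete request seen in the browser's network tab.
// autocompleteUrl is a hypothetical helper, not part of Page Forms.
function autocompleteUrl(apiBase, externalUrlAlias, substr) {
  const url = new URL(apiBase);
  url.search = new URLSearchParams({
    action: "sfautocomplete",
    format: "json",
    external_url: externalUrlAlias, // alias configured on the wiki
    substr: substr,                 // what the user has typed so far
  }).toString();
  return url.toString();
}

console.log(autocompleteUrl("https://example.dom/w/api.php", "tempURL", "lines"));
```

Pasting the printed URL into a browser or curl should return the same JSON that the form receives.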

I’m instrumenting the code for select2.js (console.log() is your friend!) and I can see that by the time we get to its populate() method we have a list of objects that look like this:

Object { id: 0, value: "borderlines", label: "borderlines", text: undefined }

OK, I can see it substituting its own id, so I’ll take that out of my results.

There is no difference. (Well, the ordering is different, with id now coming at the end, but that is to be expected.)

Now, what happens if I take out text?

Same thing. Ordering is different, but still shows up as undefined.

Output from my custom autocompleter now looks like this:

{"sfautocomplete":

{"2435":{"value":"borderlines",

"label":"borderlines"},

…

and the SMWApi is now giving

{"warnings": {

"main": {

"*": "Unrecognized parameter: '_'"

}

},

"sfautocomplete": [

{

"value": "borderlines",

"label": "borderlines"

}, ....

Still the same problem. Let me try Hermann’s suggestion and make my output look like:

{"sfautocomplete":

[

{"borderlines":"borderlines"},

....

Still, no results. The resulting object does look like this, though:

Object { id: 0, borderline: "borderlines", label: "borderlines", text: undefined }

Looking at my instrumented code and the traceback, I have found that the transformation takes place in the call

options.results(data, query.page);

at the success callback around line 460 in select2.js. This leads us back to ajaxOpts.results() at line 251 in ext.sf.select2.tokens.js (since this is the token input method I’m looking at) and, yep, it looks like I should be putting something in the title attribute.
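I am not reproducing the actual Page Forms code here. The following is only a hypothetical sketch of the kind of mapping that would produce the objects I was seeing: a results handler that replaces `id` with the array index and fills `text` from `title`, so any entry without a `title` ends up with `text: undefined`. The name `toSelect2Results` is made up for illustration.

```javascript
// Hypothetical illustration, NOT the real ext.sf.select2.tokens.js code.
// It mimics the observed behaviour: id becomes the index, text comes from title.
function toSelect2Results(data) {
  return data.sfautocomplete.map((item, index) => ({
    ...item,
    id: index,        // whatever id the script sent is overwritten
    text: item.title, // undefined when the script sent no title
  }));
}

// What my script was sending at first: no title, so text is lost.
const before = toSelect2Results({
  sfautocomplete: [{ id: "borderlines", value: "borderlines", text: "borderlines" }],
});
console.log(before[0].text); // undefined

// With a title attribute, text is populated.
const after = toSelect2Results({ sfautocomplete: [{ title: "borderlines" }] });
console.log(after[0].text); // borderlines
```

Under that assumption, it makes sense that dropping `id` or `text` from my output changed nothing: neither one survives the mapping.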

And, yay!, after changing the output of my custom autocomplete script to:

{"sfautocomplete":

[

{"title":"borderlines",

"value": "borderlines"},

....

the autocompletes start working. In fact, putting

{"sfautocomplete":

[

{"title":"borderlines"}

....

is enough.
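For reference, here is a minimal sketch of the filtering and response-building step, written in JavaScript rather than the PHP I actually used. The function name `buildCompletions` and the sample word list are made up; the only parts taken from the findings above are the `sfautocomplete` key and the `title` attribute.

```javascript
// Hypothetical stand-in for the PHP shim: filter a word list by substring
// and wrap the matches in the shape that made autocompletion work.
function buildCompletions(words, substr) {
  const needle = substr.toLowerCase();
  const matches = words.filter((w) => w.toLowerCase().includes(needle));
  // Each entry needs only a "title" attribute.
  return { sfautocomplete: matches.map((w) => ({ title: w })) };
}

// Sample data (made up); the real script read from short.txt.
const words = ["borderlines", "guidelines", "pipeline", "zebra"];
console.log(JSON.stringify(buildCompletions(words, "lines")));
// prints {"sfautocomplete":[{"title":"borderlines"},{"title":"guidelines"}]}
```

Note that the result is a plain array of objects, not an object keyed by line number as in my first attempt.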

If you made it this far, you’ll know that I should have just copied the example I found when I updated the page on MW.o, but then I wouldn’t have understood this as well as I do now. Instead, I used what I learned to provide an example in the documentation that even I wouldn’t miss.

(Image is public domain from the Henry Altemus edition of John Bunyan’s Pilgrim’s Progress, Philadelphia, PA, 1890. Illustrations by Frederick Barnard, J.D. Linton, W. Small, etc. Engraved by Dalziel Brothers. Enlarged detail from Wikimedia Commons uploaded by User:Dr_Jorgen.)